Previously, we discussed the difference between scales, scores, and definitions of proficiency. Let's build on that as we dig into student growth.

The easiest way to determine growth is to take a measurement, take a second measurement at a later time, and subtract the first result from the second. The difference is a growth number and, if it is large enough, it counts as progress.
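
To make the subtraction concrete, here is a minimal Python sketch. It assumes both measurements sit on the same scale. The function names, the scores, and the "expected gain" threshold for calling growth progress are all hypothetical, not STAAR's actual rules.

```python
def growth(first_score: float, second_score: float) -> float:
    """Growth: the later measurement minus the earlier one (both on the same scale)."""
    return second_score - first_score


def counts_as_progress(first_score: float, second_score: float, expected_gain: float) -> bool:
    """Hypothetical rule: growth counts as progress when it meets an expected gain."""
    return growth(first_score, second_score) >= expected_gain


# Hypothetical fall and spring scores on one shared scale.
fall, spring = 1450, 1530
print(growth(fall, spring))                                # 80
print(counts_as_progress(fall, spring, expected_gain=60))  # True
```

The threshold is the part that turns a growth number into a judgment about progress; without a shared scale, neither the subtraction nor the threshold means much.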

STAAR has to do this; it's the only way to be consistent and equitable on a state-level, criterion-referenced assessment. But STAAR results also translate into Progress Levels with descriptors. For example, groups of students can earn Limited Progress from one year to the next. Regardless of how those students performed, we know this means their growth was not to the degree expected from one grade level to the next.

We can measure linear growth this way only because STAAR in one year and STAAR in the next use the same scale. Our local assessments often do not. So instead, we validate our assessments with practical professional knowledge and draw conclusions from the results.

We then vary our assessments based on these desired conclusions. We assign warm-up activities, tickets out, quizzes, exams, projects, and performance tasks. And… that’s all we need to determine if it’s jacket or sweater weather. We aren’t assigning accountability ratings; we’re trying to figure out who knows what and what kinds of interventions/enrichments will be necessary.

Student growth is a function of student performance over time. If it helps, we can make up a silly but impressive-looking formula to express this:
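
One way to write it, using symbols of our own choosing ($G$ for growth, $P_t$ for a student's performance measured at time $t$, and $f$ for whatever judgment we apply to those results), is:

$$
G = f\left(P_{t_1}, P_{t_2}, \ldots, P_{t_n}\right)
$$

When there are only two measurements and both share one scale, as with STAAR from one year to the next, $f$ reduces to the simple subtraction $G = P_{t_2} - P_{t_1}$.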

Now, we have established that student performance does not have to be on the same scale, as long as we don't need a single number. In our classrooms, we are not responsible for developing accountability ratings. We don't need any fancy-pants math to look at some results and draw conclusions about learning. And if we are looking at a battery of performance results from a given timeframe, we can figure out what those mean, too. As we know, the conclusions, and the action plan that follows from them, are what really matter for us.

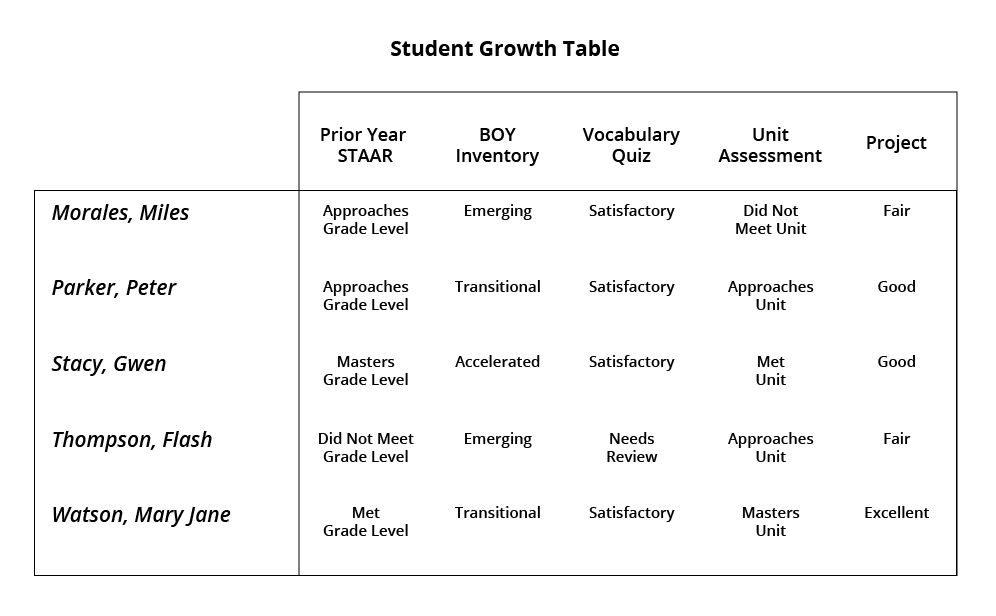

So let's update our visual to something more practical:

Here we have a set of students and a series of assessments that have meaning to the educator who compiled them. We can assume that the Vocabulary Quiz, Unit Assessment, and Project had summative scores recorded, but note that we do not include those here. Instead, we have performance levels translated from various scales: a STAAR scale score, an inventory scale, two percentage scores, and a rubric.
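
A minimal Python sketch of that translation step follows. Every cut point and level name in it is hypothetical (real STAAR cut scores, for instance, vary by grade and subject); the point is only that each scale gets its own cut points, while every assessment lands on one shared set of performance levels.

```python
from bisect import bisect_right

# Hypothetical shared performance levels, lowest to highest.
LEVELS = ["Below", "Approaching", "Meets", "Exceeds"]

# Hypothetical cut points per assessment: the minimum score for each level above "Below".
CUTS = {
    "STAAR": [1500, 1600, 1700],      # scale score (made-up cuts)
    "Inventory": [400, 550, 700],     # inventory scale (made-up cuts)
    "Vocabulary Quiz": [60, 70, 90],  # percentage score
    "Unit Assessment": [60, 70, 90],  # percentage score
    "Project": [2, 3, 4],             # 4-point rubric
}


def to_level(assessment: str, score: float) -> str:
    """Translate a raw score on one assessment's scale into a shared performance level."""
    # bisect_right counts how many cut points the score meets or exceeds.
    return LEVELS[bisect_right(CUTS[assessment], score)]


def profile(scores: dict[str, float]) -> dict[str, str]:
    """Build one student's row: performance levels only, no raw scores."""
    return {name: to_level(name, score) for name, score in scores.items()}


# One hypothetical student's raw results on five different scales.
student = {"STAAR": 1580, "Inventory": 610, "Vocabulary Quiz": 85,
           "Unit Assessment": 72, "Project": 3}
print(profile(student))
```

Putting the levels, rather than the raw scores, side by side is what lets a teacher read a row across assessments that were never on the same scale.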

This is why I like SLOs. With an SLO, a teacher, or team of teachers, decides what to focus on and then collects student performance at different times for that focus area. It can consist only of rubric results (Skill Profiles and Targets) or include other evidence of learning as needed. The focus is on the next steps. With SLOs, data inform not only pedagogy but also professional learning. Instructional leaders can coach individual teachers or teams based on specific needs. While one person may struggle with deconstructing data trends, another may need mentoring on how their own professional learning gaps might relate to those trends. A team might need help working together to develop common strategies for common issues.

Data and numbers are part of the program, but they are not THE program. Student growth is about teacher impact. And teacher impact is about what we do as educators once we learn what students have learned, and what they have not.

How do you track student growth and progress in your classroom or on your campus? I bet you’re doing something. I bet you have several current practices, some that you’ve been doing for years. Do you have a spreadsheet? A data wall? A binder? Do you have student-level artifacts students use to create goals for themselves as they progress through the school year?

Teaching will always include progress monitoring. But with "Student Growth and Progress" dominating discussions as the buzzword of the moment, the real question is: "How connected and formalized are your current practices for monitoring student performance over time?"
