In late 2022, the world got its first glimpse of ChatGPT, a generative AI tool that spurred a competitive wave of other AI products and AI-fueled software add-ons. Ever since, our society has been playing catch-up in terms of laws, protections, and use cases.

As educators, you’re on the front lines of this movement toward AI adoption. Whether you’re excited, concerned, or both, you may be feeling overwhelmed as you try to educate yourself on the current state of AI, especially with regard to education.

We wanted to give you a solid starting point. In this blog, we’ve answered ten important questions in one place. We hope they can help you start conversations about thoughtfully adopting AI in schools and what that means for staff and students.

Index

What does the law say about protecting student safety and privacy when using AI in the classroom?

What is a Large Language Model (LLM)?

How can you tell when something is “AI” versus an algorithm?

Is AI hurting our children’s capacity to learn?

Can using AI interfere with best practices?

What are the ethical considerations of using AI to make resources?

Is AI bad for the environment?

Should students be receiving AI education in the classroom?

Student safety should be the number one consideration when adopting new technology in the classroom. While there is one federal law governing student privacy, laws can vary by state. There are a few policies you should be aware of before signing on with an AI tool.

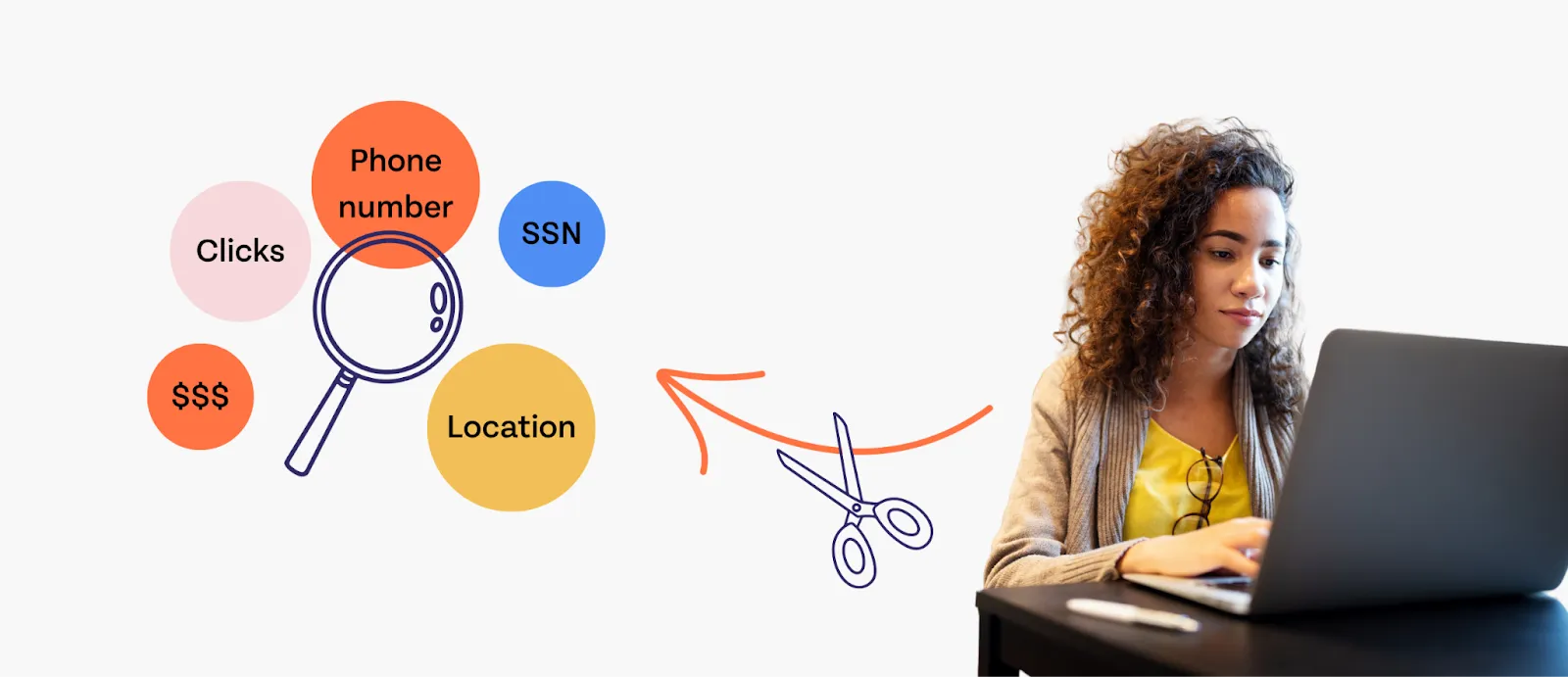

Many tools (especially free tools) collect user data to sell to other companies. While they often state this in their user agreements, it may be difficult for the average person to find and decode the language around data collection.

One article from CNBC lists the kinds of data that data brokers collect, including:

- Name, address, and contact information

- Credit scores and payment history

- What you buy and where you buy it

- Personal health information, such as medications you take and health conditions you suffer from

- Likes, dislikes, and ad-clicking behavior

- Real-time location data, such as where you spend most of your time

- Religious and political beliefs

- Relationships with others

One major concern about collecting this data is that few regulations dictate how it can be used. Worst case scenario: “Bad actors” (those who engage in harmful or unethical behavior) can purchase personal information to steal identities. Best case scenario: Your data is used to create extremely targeted ad campaigns. Obviously, this is concerning when we consider that this data is being collected from children. According to the Pew Research Center, most Americans believe that data collection practices with AI will have unintended consequences. Makes sense, considering that AI can collect extremely personal information in large quantities.

The Family Educational Rights and Privacy Act (FERPA) protects student data from unauthorized use and/or sharing with a third party. The Department of Education states that to be FERPA compliant, teachers should discuss the tools or services they’d like to adopt with their administration first, and we think that’s a pretty solid recommendation.

Ask first, download later.

Similarly, the Children’s Online Privacy Protection Act (COPPA) requires online services to get verifiable parental consent before collecting personal information from children under 13.

If students under 13 are inputting personal information into software, it’s essential to make sure that it is COPPA compliant.

Generally, it’s a good idea to leave software adoption discussions to your administrators and IT team. If you see something you like, send it up the chain! They have to do the hard work of making sure that it complies with state and federal laws and protects student data. If you’re one of those people who have to ensure student data security, here are some additional legal requirements to consider.

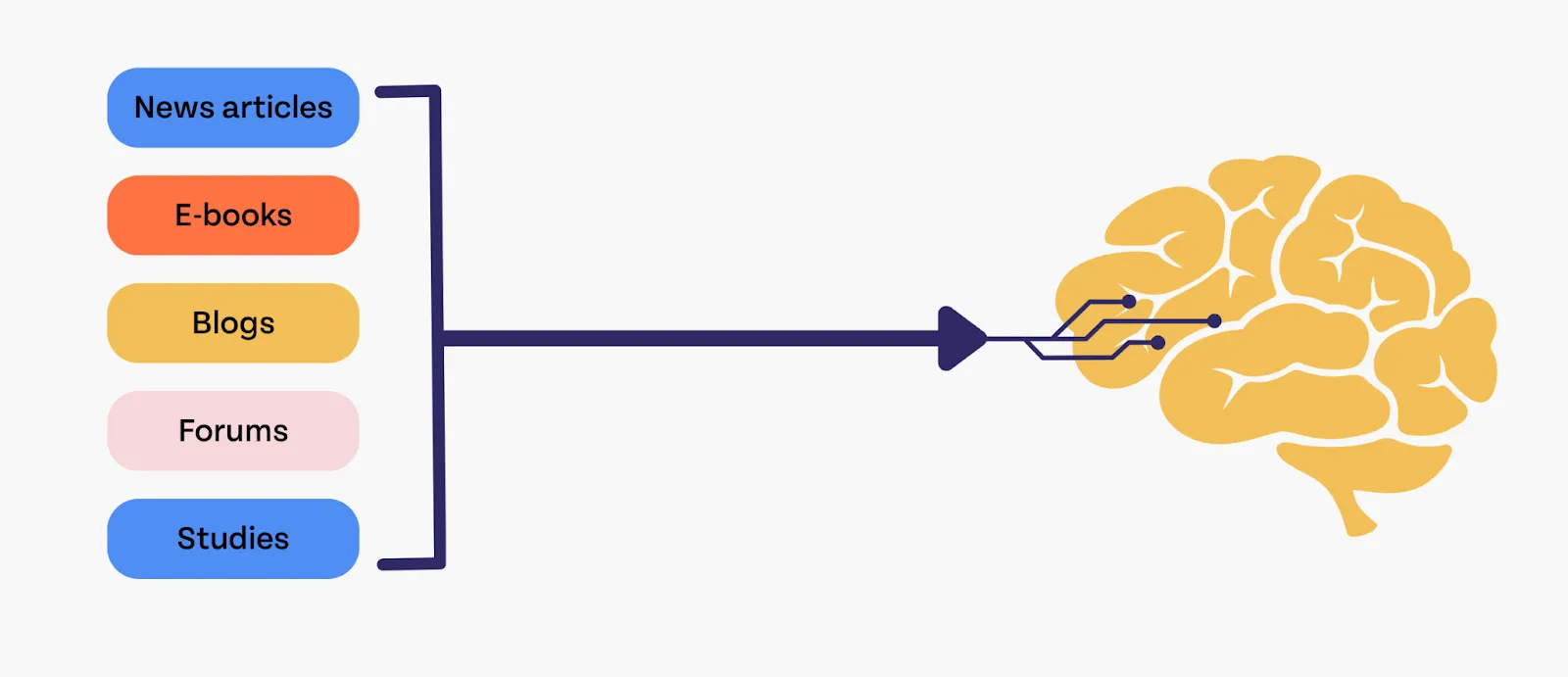

Most people are familiar with ChatGPT, one of the most popular tools built on an LLM. These forms of AI are trained on large quantities of text so they can return text answers that are (ideally) factual, accurate, and human-sounding.

LLMs are the subject of some controversy because of the way they are trained. As an educator, here are some things you may need to know about how this information is collected, as well as whether it’s as trustworthy as it seems:

- There have been several lawsuits from publication companies, such as The New York Times, whose copyrighted publications may have been stolen and used to train Large Language Models. As a result, responses from LLMs contain language and information that they may not have a legal right to use.

- Many larger companies have come to agreements about how their publications can be used to train AI, but smaller publishers don’t have the money to stand up against these large companies to demand compensation for the use of their work in training AI.

- The language an LLM uses comes from human-written, flawed, and potentially biased sources. Many companies that create LLMs attempt to mitigate bias in their models by vetting information. But with the volume of data used to train models, this is often impossible at scale. Things fall through the cracks. While the AI is returning computer-generated responses, it was taught to “think” the way it does based on huge quantities of human-written information that can be opinionated, biased, or even outright false.

- LLMs are known to “hallucinate.” This means that the AI detects a pattern that does not exist or generates an answer that is factually untrue. These hallucinated answers are confidently delivered and may even include citations that lead to nowhere. This is why it’s incredibly important to fact-check anything that an LLM generates.

So, based on these pieces of information, educators need to be wary of inaccurate, biased responses from Large Language Models. If they’re using them to create resources or aid in their work, they should be sure to fact-check and personalize their outputs to make sure no bias or inaccuracy creeps in.

It would also be beneficial to speak to students about these considerations, as they’re already using them to help with essay writing, assignment completion, and idea generation.

What is generative AI?

It may be worth discussing that not all AI products are large language models. Generative AI can produce many different types of content depending on how it was trained and its purpose.

Generative simply means that it “generates” content. This could be images, music, or even video. Some are even getting particularly good at creating fake movie scenes with real actors. As this technology advances, most feel a growing concern about “deep fakes” and misinformation.

Texas is one of the few states that have passed laws banning the use of this technology for political ads. Otherwise, we don’t have much legal protection yet, so it may be a good idea to keep an eye on these things as they develop.

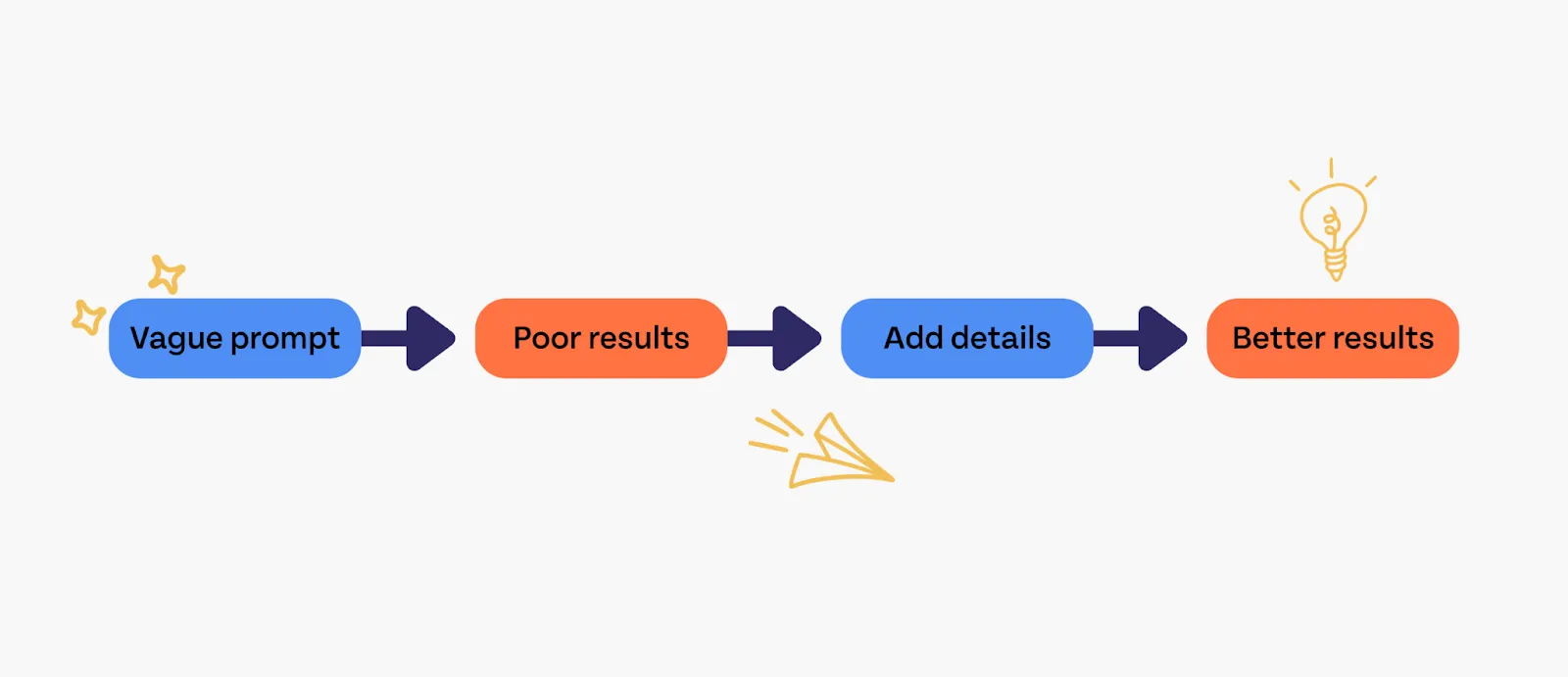

If you’ve ever used an AI to generate a written response, a graphic, or even code, you know how important it is to be specific in your prompts.

Let’s say you put this prompt into an LLM: “Write an outline for a lesson plan on the nervous system.”

This might generate a helpful response, but if this were your prompt, you might notice that what the AI generates isn’t grade-appropriate or that it covers too little of what you were wanting to cover. So, you try again:

“Write an outline for a lesson that will be taught to 11th-grade Advanced Placement students in Anatomy and Physiology. The lesson will be on the parts of the nervous system. They should be able to use critical thinking, scientific reasoning, and problem-solving. They must communicate and apply scientific information extracted from various sources, such as current events, news reports, published journal articles, and marketing materials, per the Texas Essential Knowledge and Skills (TEKS). The outline should include an introduction, an activity, and an opportunity for reflection. Ensure the activity asks students to communicate and apply information on the nervous system using various sources, as listed in the TEKS.”

In this scenario, you noticed that your prompt didn’t return an ideal response, so you engineered it to be better.

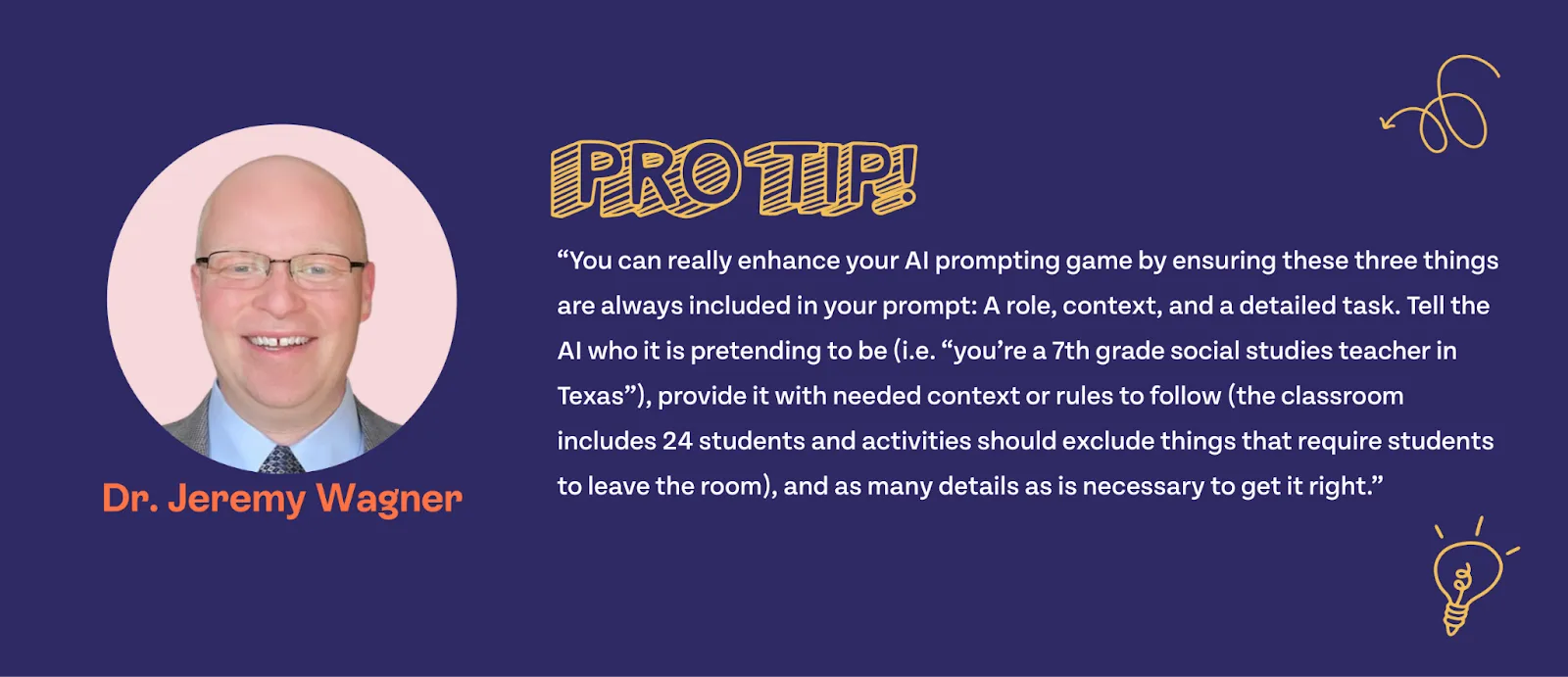

Prompt engineering is exactly this! You engineer your prompts by inputting specific information that might help with audience, tone, and even accuracy.
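If you find yourself rewriting the same kind of prompt over and over, it can help to treat it like a fill-in-the-blank template. Here's a minimal sketch in Python of that idea. The function name `build_lesson_prompt` and its parameters are made up for illustration; they aren't part of any AI tool, and the wording of the template is just one reasonable option.

```python
def build_lesson_prompt(topic, grade_level, course, standards, sections):
    """Assemble a detailed lesson-outline prompt from specific pieces of context.

    Hypothetical helper for illustration only; it just builds a string
    that you could paste into whichever AI tool your school has approved.
    """
    section_list = ", ".join(sections)
    return (
        f"Write an outline for a lesson on {topic}. "
        f"It will be taught to {grade_level} students in {course}. "
        f"Align the lesson with these standards: {standards}. "
        f"The outline should include: {section_list}."
    )


prompt = build_lesson_prompt(
    topic="the parts of the nervous system",
    grade_level="11th-grade Advanced Placement",
    course="Anatomy and Physiology",
    standards="Texas Essential Knowledge and Skills (TEKS)",
    sections=["an introduction", "an activity", "an opportunity for reflection"],
)
print(prompt)
```

The point isn't the code itself. It's that audience, course, standards, and structure are separate blanks you can fill in deliberately every time.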

Many people believe that this is a skill that both teachers and students will have to know to be successful in the coming years. One CNBC report revealed that 96% of executives felt an urgency to incorporate AI into their business operations, and 70% of leaders won’t hire someone without AI skills. Unfortunately, the onus is on the individual to learn how to use it, as only 25% of these employers plan on offering training in these areas.

If AI is going to stick around for the long term, then it will be important to learn the most effective way to engineer a prompt. For now, here’s a resource that can help with prompt engineering.

People are getting more curious about the processes that technology uses to generate a response! After all, if you’ve spent any time using popular AI programs, you might feel that it’s not all that different from an algorithm.

For example, classic spell-check tools were algorithms. They used a saved version of a dictionary to identify misspelled words and tag them for correction. Each tool had been engineered to perform a specific function. Until an engineer updated it, it would continue to function in exactly the same way.

These days, spell check is often “powered” by AI. For example, Grammarly (an AI-powered spell-checker) may identify opportunities for corrections based on the AI’s interpretation of certain rules or styles. It may even gear its suggestions based on what many people accept or reject when presented with similar suggestions.

Engineers continue to develop and update these tools, but the tools themselves constantly evolve and learn based on the input they receive every day.

Simply put, algorithms are coded and engineered to perform a certain function. AIs are “trained” on data to perform a certain function in a way that mimics human intelligence. AI tools can keep changing as they are trained on new input, whereas algorithms need to be manually updated to adopt new functions.
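To make the "algorithm" side of this concrete, here's a rough sketch of a classic dictionary-based spell-checker in Python. The word list is a tiny, made-up stand-in for a real dictionary, and `find_misspellings` is a hypothetical name, not how any particular product works.

```python
import re

# Hypothetical, tiny stand-in for the saved dictionary a classic spell-checker ships with.
DICTIONARY = {"the", "nervous", "system", "sends", "signals", "through", "body"}


def find_misspellings(text, dictionary=DICTIONARY):
    """Return the words in `text` that are not in the dictionary, in order of appearance."""
    # Lowercase the text and pull out runs of letters (and apostrophes) as words.
    words = re.findall(r"[a-z']+", text.lower())
    return [word for word in words if word not in dictionary]


flagged = find_misspellings("The nervus system sends signels through the body")
print(flagged)  # ['nervus', 'signels']
```

Notice that nothing here learns. The checker will flag exactly the same words tomorrow unless a person edits the word list or the code.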

It may be difficult to tell the difference between these two at times; however, for the most part, tools that use AI will be labeled as such. It’s extremely marketable to use AI in this day and age, so there wouldn’t be much reason to keep this information a secret.

Many educators are concerned about the long-term ramifications of AI, especially LLMs, on metacognition, learning, and creativity.

While there haven’t been many studies on the effects of AI on learning just yet (often that kind of research is longitudinal and takes years to draw hard conclusions), there are some clear benefits and drawbacks to keep in mind in terms of its use in the classroom.

Benefit #1: AI is a powerful accessibility tool for people with disabilities.

Many people cite “the blank page” as a major barrier for students with disabilities. While this is just one example, it’s a great visual demonstration of how AI can help “level the playing field” in tasks as small but essential as written communication.

For example, Autistic people and people with Attention Deficit Hyperactivity Disorder (ADHD) often have difficulty with task initiation. Because it is difficult to start or transition between tasks or even exert effort on something they consider difficult, AI can help them get words, thoughts, or ideas down, so the task feels more manageable.

Benefit #2: AI can facilitate exploratory exercises for project-based learning.

Many people use AI as a replacement for Google. While this isn’t necessarily the best practice, they’re close to an idea that could be extremely useful: immersive topic exploration.

Let’s say you’ve assigned a project-based learning unit. These are often based on things that students consider to be personally important or relevant. For many students, it may be difficult to find things they genuinely care about that align with their existing interests. This is where AI can help facilitate.

Through prompt engineering, students can explore topics of interest that can guide them down learning paths. For example, “I’m a tenth-grade student who loves crafting. I also love animals. What are some ideas of things that I could do to help my community that align with my interests?”

It would be silly to put this into Google search (prior to Gemini), but AI could use this context to compile information in a way that is more accessible than a list of search results.

If you do use this idea, be sure to refer to #1 on this list to make sure that your AI tool protects your students’ information.

Drawback #1: Students may not be making the best use of the tools.

In general, students cheat. However, the prevalence of AI doesn’t seem to increase cheating behaviors; rather, it’s just another means for the kind of academic dishonesty that’s always present in a high-pressure environment. A much larger concern than cheating (and students’ overreliance on machines to do the thinking for them) is the proper use of AI tools to help them learn.

There have been many studies on why students cheat.

Many students “cheat” or use tools dishonestly when they aren’t sure whether what they’re doing actually constitutes cheating. Clear expectations and procedures can solve this.

According to the research linked above, the classroom environment makes a significant difference in whether students cheat at all. For example, if the teacher is engaged in the learning experience and sets clear expectations, students are less likely to cheat. If students feel that they can’t approach their teacher for help, they’re more likely to cheat. If students feel like their teacher isn’t teaching the subject well? Yeah, they’re more likely to cheat.

How students use tools and engage with assignments largely depends on the classroom environment. So, while it’s understandable to be concerned about the effects of these tools on cognition and academic dishonesty, for now, we have the solution.

Good ol’ fashioned high-quality teaching makes a world of difference.

Drawback #2: The way students use AI reflects a larger problem.

Did you know that students are increasingly using AI as their friend or counselor? While many efforts have been made to increase the effectiveness of AI in counseling, there’s a larger concern here. Gen Z and Gen Alpha students have been in the midst of a mental health crisis that seemingly won’t let up.

Gen Z is currently being referred to as the loneliest generation. Social media has done a number on them, and we’re still reeling as 21st-century people as we try to figure out a way to protect them from it.

Here’s the thing… students who are suffering from anxiety and depression probably aren’t learning or performing well.

Our students crave connection. They want to be seen, and they want to be heard. So, while we should still give deep consideration to the effects of AI on our students, especially their ability to think and process problems, we can be a strong foundation in their lives. As their teachers, support staff, and administrators, you can help them feel connected to their community; you can help them think through problems and have the skills to process them in a healthy way; and you can let them know that they matter.

A mixed bag of benefit and drawback: AI reduces brain activity

A recent MIT study showed that we use less of our brain when we rely on AI. This information has already been used to hypothesize and catastrophize about the long-term effects of AI on developing brains.

However, experts in the community have already pointed out that this study doesn’t differentiate based on whether the participants had neurodevelopmental differences, such as ADHD and Autism.

People who already struggle with equal access to education will experience AI differently than those with normal executive functioning (the ability to make decisions, complete tasks, and transition between activities). Whereas someone with a neurological difference may be dealing with a massive amount of stimuli, thoughts, and worries that hold them back from participation in an assignment, a student in the norm can command focus and attention to complete assignments. They live without the added burdens experienced by brains that are outside of the norm.

If AI reduces brain activity, then for those in the classroom whose brain activity is always above the norm, this might still be a tool that levels the academic playing field, making the impossible seem within reach.

The words “best practices” are thrown around a lot, but how often are they explicitly defined? Best practices evolve often and are based on research. Today, some best practices involve:

- Using data to personalize instruction, intervention, and enrichment

- Teaching subject-specific vocabulary to ground students in new knowledge

- Defining objectives before teaching a lesson or starting an activity

- Using multiple methods and forms of assessment to accurately assess student progress and achievement

There are many more “best practices,” but you get the idea. These practices often take a great deal of skill, attention, and effort. So, you can begin to see how AI tools may seek to replace some of these practices, with varying degrees of success.

For example, defining objectives is a best practice, but it can be time-consuming to articulate them when you’re teaching and practicing multiple standards. AI may be a good way to condense and articulate those objectives. This is an effective use of AI because it reduces time-consuming tasks without taking attention away from the student.

In the words of Dr. Jeremy Wagner and Dan Lien, two of our AI experts at Eduphoria, “AI should be your assistant, not your replacement.”

Just imagine your AI tools as your maid. What chores can you pass on to them to lighten your workload?

Here are some examples of chores that AI or other software may be able to help with that don’t attempt to replace you as the teacher:

- Automatic scoring

- Seating chart creation

- Resource summaries

- Alternate wording suggestions

- Technical vocabulary identification

- Possible student question generation

- Spelling lists

- Outlines

- Idea generation

- Station group creation

- Randomization

Menial tasks are safer to hand off to an AI than tasks that require specific training and expertise. (Just be sure not to put a student’s personally identifiable information into an AI.)
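Some of these chores don't even need AI. Randomization and station group creation, for instance, can be handled by a few lines of ordinary code. Here's a minimal Python sketch; `make_station_groups` is a hypothetical helper, and the IDs are anonymous placeholders so that no personally identifiable information is involved.

```python
import random


def make_station_groups(student_ids, group_count, seed=None):
    """Shuffle anonymous IDs and deal them round-robin into `group_count` groups."""
    rng = random.Random(seed)  # pass a seed to reproduce the same grouping later
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    # Every group_count-th ID goes to the same station, so sizes differ by at most one.
    return [shuffled[i::group_count] for i in range(group_count)]


# Anonymous placeholders, not real names or student numbers.
ids = [f"S{n:02d}" for n in range(1, 11)]
groups = make_station_groups(ids, group_count=3, seed=7)
for number, group in enumerate(groups, start=1):
    print(f"Station {number}: {group}")
```

Leaving out the seed gives you a fresh shuffle each time; keeping it lets you recreate yesterday's groups.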

We have to ask, “What is the ultimate goal?” when we take on tools to lower our workloads.

Obviously, reducing burnout and getting time back into your schedule are top priorities, but ultimately, the aim is to reduce the number of time-consuming administrative tasks so that educators can spend more time with their students.

AI should not replace relationship building, intervention planning, or data-centric decision-making because those are the things that really matter.

If teachers had as much time as they’d like to teach and help students, what would they spend their time doing? Those things should stay in your hands.

There are a lot of complicated ethical considerations when it comes to using AI. Some of them have already been mentioned in this resource, including that some of these LLMs were trained using stolen content.

Here are some examples of ethical “sticky areas” that may need some deeper thought:

- Am I using stolen content to create a unique resource?

- Am I using AI to create shortcuts that may accidentally harm student learning or engagement? (Refer to the previous section)

- Am I paying as much attention to best practices and standards as I need to create a robust learning resource?

- Is the resource that I’m generating high-quality, accurate, and factual?

- Is the resource that I’m creating facilitating my own creative process rather than replacing it?

Let’s break some of these down into more detail.

“Am I using stolen content to create unique resources?”

AI is often trained on large sets of data. If you asked a Large Language Model to emulate the style and voice of The New York Times, it would do this rather effectively because it has “consumed” thousands of articles in the style and voice of The New York Times.

You could ask AI to emulate the style or voice of just about anything or anyone. You could even create fake resources in the voice of famous writers. Say you’re doing a unit on Mark Twain and wanted to create a short story in his voice that reflected the modern experience of a teenager.

Mark Twain’s works are in the public domain, so this would be a really interesting way to use this feature. Now, say you want to create a resource that mimics the voice of a modern-day writer who didn’t consent to the use of their works for training AI. This is much more of a gray area that is probably best avoided, as their intellectual property very much includes their tone, their voice, and the way that their writing is uniquely them.

Aside from legal considerations such as copyright, there are moral considerations as well. Artists of all kinds spend years developing their “style.” An artist’s style is a combination of time, effort, and skill. Skill can only be developed through hundreds, if not thousands, of hours of practice.

For example, AI can now create pictures in the style of Studio Ghibli, a renowned movie studio with a recognizable art style and a beloved manner of storytelling. This style was developed and honed over decades and can easily be distinguished from other animation studios.

For writers, their style is their “voice.” There are a few modern writers whose voices are distinct, including Stephen King, Terry Pratchett, and Toni Morrison. None of these writers’ works are in the public domain, so they are legally off-limits, but morally?

AI can create artworks in the style of Studio Ghibli or even whole novels in the voice of Stephen King in an instant. Forget the decades of work and painstaking development of skill it took to bring these styles into existence.

It’s theft for a person to steal the decades-long expertise of other artists and claim it as their own, so…what’s the difference with an AI?

You have to come to your own moral conclusions, but you may want to avoid asking AI to imitate specific modern-day people. Asking it to use a certain tone of voice or engineering your prompt to create a photo that doesn’t explicitly copy a certain style is probably all right.

“Is the resource that I’m generating high-quality, accurate, and factual?”

This one is pretty easy to figure out. If you use AI to generate an overview of a specific historical event, perhaps, then it would be responsible and ethical to fact-check the overview, add details that you believe are pertinent, and make sure that it’s free of biases that could impair learning and engagement. Consider asking the AI to provide the resources it used to generate the response, check those, and verify that what it’s saying is accurate as well.

You are the subject matter expert, so make sure you’re using your expertise to rein in the machine! If you’re not sure what to fact-check when it comes to AI-generated resources, here’s a helpful bullet list:

- Names

- Dates

- Locations

- Biased wording

- Sequential order

- Sources of information

If something doesn’t feel right, check it. Trust your gut.

In a similar vein, “Am I putting as much attention into best practices and standards as I need to create a robust resource?”

If you feel that you’re not, then you’re probably not. Treat the resources you create as if they’re not up to standard. How will you check for quality? Read it over with your unique eye, your subject matter expertise, and your knowledge of best practices. If something seems off, fix it.

Would you rather go through an automatically generated resource and add your own analysis of how it assesses the standards? Then by all means, do it! Would you like to make a list of interventions, best practices, and teaching strategies that coincide with the resource you generated? That’s a great idea!

Don’t forget that you are the expert, not the AI.

“Is the resource I’m creating facilitating my own creative process?”

Generating an entire resource with no human intervention is a great example of AI replacing you rather than assisting you.

You are better at your job than AI, so put some reins on that thing and get it in line! What are some things that you hate doing?

For me, to-do lists are very helpful, but I hate making them. This is a great use for AI. I can have it make the list for me, break it down into individual tasks, and even generate suggestions for things to do within that list, but then it’s entirely up to me to execute the tasks in only the way that I can.

If you feel like AI is stifling your creativity and autonomy, then it is.

This has been touched on throughout the piece, but if you’re unfamiliar with AI as a whole, then it might be worth listing all of the ways that our more proficient students are using it.

It’s worth repeating that students aren’t using AI, specifically LLMs, to cheat as often as you think they are. Most students know that using AI to generate entire assignments isn’t okay. According to surveys and research, students most often use AI for tasks like these:

- Getting information (Harvard)

- Brainstorming

- Help with homework

- Getting advice

- Exploring topics

- Supporting creative thinking

- Making sounds or music

- Generating images

- Writing code

- Writing first drafts (Forbes)

- Finding academic sources

- Inspiration

- Clerical work, such as scheduling or emails

- Math help

And the list goes on.

So, why is this helpful for you to know? For one thing, it might give you an idea of how you can use AI for your own processes. For another, it can help you speak their language, create assignments, and support their processes. If you know that students are using AI to get math help and you’re a math teacher, have a student ask AI their question first, then explain the answer or solution back to you in their own words. You can then tell them whether the answer was accurate, add details, and support that individual student.

Generally, when we consider whether something is “bad” for the environment, we ask these questions:

- Does this use or redirect essential resources?

- Does this affect air quality or contribute to the depletion of the ozone layer?

- Does this generate waste? Especially non-recyclable waste?

- Does this negatively affect certain ecosystems and/or disrupt the life cycle?

- Does this put toxic chemicals into the environment?

- Does this exacerbate existing climate concerns?

Now, if we want to assess AI on these terms, we can talk about specific ramifications for widespread adoption of AI services.

Yes, AI uses or redirects essential resources such as valuable minerals, electricity, and water (MIT). Most technology does.

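Yes, in a sense, AI affects air quality in that electricity is still generated by burning fossil fuels in many parts of the world (MIT). Burning fossil fuels pollutes the air and releases greenhouse gases. Ozone depletion, on the other hand, is driven mainly by chemicals such as chlorofluorocarbons rather than by electricity generation.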

AI does generate waste. The machines that support its processes will eventually have to be trashed and replaced, meaning that AI does contribute to the growing e-waste issue we face in the modern age (MIT).

AI may negatively affect certain ecosystems depending on where servers are located and how much fossil fuel is being used to run AI processes. Long-term, the answer is absolutely yes.

AI does put toxic chemicals into the environment, both through emissions and through e-waste, which may leak hazardous materials into the ground and water near landfills (Earth).

AI does exacerbate existing climate concerns through the sheer quantity of electricity it needs to function and the processes that generate that electricity.

Some additional considerations for the role of AI and technology in climate change

One of the reasons that AI is getting more attention in terms of its potential effects on the environment is the extravagant amount of electricity it uses compared to other technologies. Technically speaking, a simple Google search is bad for the environment in these same ways, but a Google search uses significantly less energy than a query to an LLM.

All technology harms the environment in some way. Does that mean we should shun technological advancement entirely and shut down the server farms for good? Probably not, but we should definitely be mindful of the ways we use these resources, as there are visible and invisible costs for the convenience that AI provides.

One simple way to reduce the environmental cost of AI? Stop saying thank you to the AI.

This is another complicated topic. Teachers, understandably, have a lot of angst about using AI. Not only are teachers being expected to integrate AI into their practices without any training or guidance, but they’re being asked to teach students AI practices when they hardly understand it themselves (Education Week).

Despite this, most experts believe that students need proactive education about how to safely and effectively use AI. AI is not only rapidly evolving the workplace and education, but it’s also changing expectations in terms of self-sufficiency, research, and adaptability. At the very least, students should learn how to use AI to make life easier for themselves, so they can direct their energy toward their interests, passions, and community.

So, where does this leave us?

Students need AI education, but the burden shouldn’t be entirely on the classroom educator. Schools need to provide robust professional development on how teachers and admins can use AI for themselves, and each school should have an AI expert to help them navigate the evolving culture, expectations, and uses of AI.

Schools should be able to foresee the potential risks and consequences of AI before integrating it thoroughly into the frameworks of their schools, and to do that, they’ll need to foster that expertise in their communities or build relationships with AI experts in education.

Start slow, adopt safely, and provide learning opportunities so teachers can do what they love most: support students. The burden of AI education needs to be shared, expectations should be explicit, laws should be considered, and experts should be included in every step of the process.

Subscribe to our newsletter to get notified when we publish resources like this one

If you’re interested in the evolving world of ed tech, you should subscribe to our newsletter using the “stay up to date” toolbar on this page! We’ll continue to thoughtfully discuss AI as it evolves, but you can always expect guidance on how to use education software, tools, and best practices to support student growth.
